Interpreting Evo 2: Arc Institute's Next-Generation Genomic Foundation Model

Authors

Goodfire

Published

Feb. 20, 2025

We're thrilled to announce our collaboration with Arc Institute, a nonprofit research organization pioneering long-context biological foundation models (the "Evo" series). Through this partnership, we've developed methods to understand their models with unprecedented precision, enabling the extraction of meaningful units of model computation (i.e., features¹). Preliminary experiments point to promising directions for steering these features to guide DNA sequence generation, though this work is still at an early stage.

Introducing "Interpretable" Evo 2

Today, Arc announced its next-generation biological foundation model, Evo 2, available in 7B- and 40B-parameter versions that can process sequences of up to 1 million base pairs at single-nucleotide resolution. Trained on genomes from all domains of life, it supports both prediction and generation across multiple levels of biological complexity. Through our collaboration, Goodfire and Arc have made exciting progress in applying interpretability techniques to Evo 2, discovering numerous biologically relevant features in the models, ranging from semantic elements like exon-intron boundaries² to higher-level concepts such as protein secondary structure³.

Why This Matters

Biological foundation models present a unique challenge and opportunity for AI interpretability. Unlike language models, which process human-readable text, these neural networks operate on DNA sequences, a biological code that even human experts struggle to read directly. Evo 2 presents an especially complex version of this challenge, processing multiple layers of biological information: from raw DNA sequence to the proteins it encodes and the intricate structures its RNA transcripts form. By applying state-of-the-art interpretability techniques (similar to those detailed in our Understanding and Steering Llama 3 paper), we hope to:

- Extract and analyze previously hidden biological knowledge within these models, potentially revealing novel mechanisms and patterns

- Enable biologists to conduct targeted experiments at unprecedented scale through controlled DNA sequence generation

This interpretability breakthrough could deepen our understanding of biological systems while enabling new approaches to genome engineering. These advances open possibilities for developing better disease treatments and improving human health.

We provide a high-level overview of our work below. The mechanistic interpretability sections (2.4 and 4.4) of the preprint contain more detailed information on our findings.

Interpreter model overview

Training an Evo 2 interpreter model (sparse autoencoder or SAE)

In our collaboration with Arc, we trained BatchTopK sparse autoencoders (SAEs) on layer 26 of Evo 2, applying techniques we've developed while interpreting language models [1, 2]. Working closely with Arc Institute scientists, we used these tools to understand how Evo 2 processes genetic information internally.

We discovered a wide range of features corresponding to sophisticated biological concepts. We also validated the relevance of many of these features with a large-scale alignment analysis between canonical biological concepts and SAE features, quantified by the domain-F1 score between features and concepts [3].
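InterPLM's domain-F1 metric [3] scores how well a feature's activations line up with annotated concept regions. The sketch below assumes one plausible convention: precision is computed per position (the fraction of firing positions that fall inside an annotated region) and recall per domain (the fraction of annotated regions in which the feature fires at least once). The function name `domain_f1` and the fixed activation threshold are hypothetical, and the exact thresholds and aggregation used for Evo 2 may differ.

```python
from typing import List, Tuple

Interval = Tuple[int, int]  # half-open [start, end) in nucleotide coordinates


def domain_f1(activations: List[float], domains: List[Interval], threshold: float) -> float:
    """F1 between a thresholded SAE feature and annotated concept domains.

    Assumed convention (not confirmed by the post): precision per position,
    recall per domain.
    """
    firing = [i for i, a in enumerate(activations) if a > threshold]
    if not firing or not domains:
        return 0.0

    # Mark every position covered by at least one annotated domain.
    inside = [False] * len(activations)
    for start, end in domains:
        for i in range(start, end):
            inside[i] = True

    # Precision: how many firing positions land inside an annotation.
    precision = sum(inside[i] for i in firing) / len(firing)
    # Recall: how many domains contain at least one firing position.
    recalled = sum(
        any(activations[i] > threshold for i in range(start, end))
        for start, end in domains
    )
    recall = recalled / len(domains)

    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)
```

Scoring position-level precision against domain-level recall rewards a feature that fires somewhere in every annotated element without requiring it to cover each element end to end.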

We have seen early signs of success in steering Evo 2 to engineer new protein structures, but steering this model is considerably more complex than steering a language model, and further research is needed to unlock the full potential of this approach. The potential payoff is particularly large: a language model can be prompted to achieve desired behaviors, but a model that 'only speaks nucleotide' cannot. Learning to steer through features would therefore unlock entirely new capabilities.
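The post does not describe how Evo 2 was steered. In language-model work, a common approach is to add a scaled copy of a feature's SAE decoder vector to the hidden states at the hooked layer during generation. Below is a minimal sketch of that single operation, assuming hidden states are plain Python lists; `steer_with_feature`, `alpha`, and `positions` are hypothetical names, and hooking into Evo 2 itself is not shown.

```python
from typing import List

Vector = List[float]


def steer_with_feature(
    hidden: List[Vector],
    decoder_direction: Vector,
    alpha: float,
    positions: List[int],
) -> List[Vector]:
    """Return a copy of hidden with alpha * decoder_direction added at positions.

    hidden: one hidden-state vector per sequence position (hypothetical layout).
    decoder_direction: the SAE decoder column for the feature being steered.
    """
    steered = [list(h) for h in hidden]  # leave the caller's activations untouched
    for p in positions:
        steered[p] = [h + alpha * d for h, d in zip(steered[p], decoder_direction)]
    return steered
```

Choosing `alpha` is the hard part in practice: too small has no effect, too large pushes activations off-distribution, which is one reason steering a nucleotide-level model may need more care than prompting a language model.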

Why did we switch to BatchTopK?

We trained both standard ReLU SAEs [4, 5] and BatchTopK SAEs (a variant of TopK SAEs [6]) on a later layer (layer 26) of Evo 2. Initially, we were concerned about high-frequency features (features that activate on a large fraction of tokens) in our SAEs. Because we didn't observe any during training, we switched to the BatchTopK variant. As with conventional SAEs, the architecture is

$$
f(x) = \sigma\left(W_{\mathrm{enc}} x + b_{\mathrm{enc}}\right), \qquad \hat{x} = W_{\mathrm{dec}} f(x) + b_{\mathrm{dec}}.
$$

However, the nonlinearity $\sigma(\cdot)$ is the TopK operation over the entire batch of inputs (with batch size $B$):

$$
\sigma\left(\left[z_1, \ldots, z_B\right]\right) = \mathrm{TopK}\left(\left[z_1, \ldots, z_B\right],\ kB\right), \qquad z_i = W_{\mathrm{enc}} x_i + b_{\mathrm{enc}},
$$

where the $\mathrm{TopK}(x, k)$ operation sets all but the top $k$ elements of $x$ to zero. Because the $kB$ active slots are shared across the whole batch, the number of active features can vary from token to token (averaging $k$), while retaining the advantages of TopK SAEs: lower loss, computational efficiency, and easy hyperparameter selection. Training loss was lower than for the ReLU SAEs, and the features appeared equally crisp. We give some examples of interesting, biologically relevant features below.
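To make the batch-level selection concrete, here is a minimal pure-Python sketch of a BatchTopK forward pass. It assumes the encoder/decoder form f = σ(W_enc x + b_enc), x̂ = W_dec f + b_dec, with W_enc of shape (features × inputs) and W_dec of shape (inputs × features); the function names are hypothetical, and real implementations (which may also apply a ReLU or a pre-encoder bias) would use batched tensor operations on a GPU.

```python
from typing import List, Tuple

Matrix = List[List[float]]


def matvec(W: Matrix, x: List[float]) -> List[float]:
    """Dense matrix-vector product; W has one row per output dimension."""
    return [sum(w * xi for w, xi in zip(row, x)) for row in W]


def batch_topk(pre_acts: Matrix, k: int) -> Matrix:
    """Keep the k * B largest pre-activations across the whole batch, zero the rest.

    pre_acts has one row per token (B rows) and one column per SAE feature.
    """
    budget = k * len(pre_acts)
    flat = [
        (value, row, col)
        for row, acts in enumerate(pre_acts)
        for col, value in enumerate(acts)
    ]
    # Rank every (token, feature) entry in the batch together, not per token.
    ranked = sorted(flat, key=lambda entry: entry[0], reverse=True)
    keep = {(row, col) for _, row, col in ranked[:budget]}
    return [
        [value if (row, col) in keep else 0.0 for col, value in enumerate(acts)]
        for row, acts in enumerate(pre_acts)
    ]


def sae_forward(
    xs: Matrix, W_enc: Matrix, b_enc: List[float],
    W_dec: Matrix, b_dec: List[float], k: int,
) -> Tuple[Matrix, Matrix]:
    """Encode a batch, apply BatchTopK, and decode back to the input space."""
    pre = [[z + b for z, b in zip(matvec(W_enc, x), b_enc)] for x in xs]
    feats = batch_topk(pre, k)
    recon = [[r + b for r, b in zip(matvec(W_dec, f), b_dec)] for f in feats]
    return feats, recon


if __name__ == "__main__":
    # Budget is k * B = 2: both slots go to the first token, none to the second.
    print(batch_topk([[5.0, 4.0, 0.0], [1.0, 0.5, 0.2]], k=1))
    # [[5.0, 4.0, 0.0], [0.0, 0.0, 0.0]]
```

The usage example shows the property that distinguishes BatchTopK from per-token TopK: a token with strong evidence can use more than $k$ features while a featureless token uses none.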

We trained on an even mix of eukaryotic and prokaryotic data in order to find both domain-specific and generalizing features. Because SAEs need far less data than foundation models to learn interesting and useful features, we could restrict training to high-quality reference genomes from both domains.
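An even mix of the two domains can be enforced at sampling time. A minimal sketch, assuming genomes are held as plain strings and training examples are fixed-length windows drawn by alternating between the two pools; `balanced_windows`, the window length, and the alternation scheme are illustrative assumptions, not the authors' pipeline.

```python
import random
from typing import List, Sequence


def balanced_windows(
    eukaryotic: Sequence[str],
    prokaryotic: Sequence[str],
    window: int,
    n: int,
    seed: int = 0,
) -> List[str]:
    """Draw n fixed-length windows, alternating between the two genome pools.

    Every genome must be at least `window` nucleotides long.
    """
    rng = random.Random(seed)  # seeded so the data mix is reproducible
    pools = [eukaryotic, prokaryotic]
    windows = []
    for i in range(n):
        genome = rng.choice(pools[i % 2])  # even indices: eukaryotic, odd: prokaryotic
        start = rng.randrange(0, len(genome) - window + 1)
        windows.append(genome[start:start + window])
    return windows
```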

Why layer 26?

We trained SAEs on multiple layers of the model, including both Transformer and StripedHyena layers. Layer 26 (a StripedHyena layer) had the most interesting biologically relevant features on initial inspection, so we focused additional effort on studying this layer.

Evo 2 7B has 32 layers, so layer 26 is relatively late compared with the layers our natural language model SAEs typically target. This might be due to the much smaller vocabulary: a nucleotide-level genome model has very few tokens (primarily A, T, C, and G, though some additional tokens are used during training), whereas a natural language model has a very large vocabulary. As a result, once the relevant patterns have been computed, fewer layers may be needed to select the next token and calibrate probabilities among plausible options.

Deciphering Evo 2's latent space

Visualizing interpretable features from our Evo 2 SAE

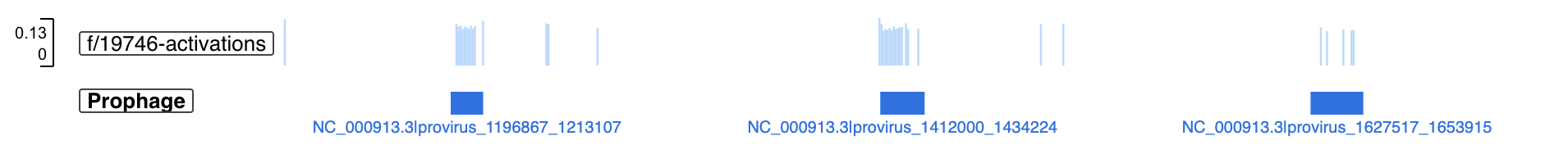

To make these features more tangible, we built an interactive feature visualizer showcasing some example SAE features as they relate to known biological concepts. Here, you can see activation values of these features across a set of bacterial reference genomes and how they align with existing genomic annotations.
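Feature visualizers typically pair an activation track with a feature's strongest examples. A minimal sketch of pulling the top-activating positions and their flanking sequence for one feature, assuming per-nucleotide activations have already been computed; `top_activating_contexts`, `n`, and `flank` are hypothetical names.

```python
from typing import List, Tuple


def top_activating_contexts(
    sequence: str,
    activations: List[float],
    n: int = 3,
    flank: int = 5,
) -> List[Tuple[int, float, str]]:
    """Return (position, activation, context) for the n highest-activating positions.

    The context is the nucleotide at the position plus up to `flank`
    nucleotides on each side, clipped at the sequence ends.
    """
    ranked = sorted(range(len(activations)), key=lambda i: activations[i], reverse=True)
    results = []
    for i in ranked[:n]:
        lo, hi = max(0, i - flank), min(len(sequence), i + flank + 1)
        results.append((i, activations[i], sequence[lo:hi]))
    return results
```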

For example, we discovered that the model has learned to identify several key biological concepts, including:

- Coding sequences (the parts of DNA that contain instructions for building proteins)

- Protein secondary structure (common shapes that proteins fold into such as α-helices and β-sheets)

- tRNA/rRNAs (RNA molecules involved in protein synthesis)

- Viral-derived sequences (prophage and CRISPR elements)

Annotating a bacterial genome

When looking at well-annotated regions of the E. coli genome, we found that the model not only recognizes gene structure and RNA segments but has also learned more abstract concepts such as protein secondary structure. In the figure below, activation values for features associated with α-helices, β-sheets, and tRNAs are shown for a region containing a tRNA array and the tufB gene. On the right, these feature activations are overlaid on AlphaFold 3's [7] structural prediction of this protein-RNA complex, illustrating how Evo 2 captures the way DNA sequence shapes downstream RNA and protein products, and how we can decompose this understanding into clear, interpretable components.
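The post doesn't specify how nucleotide-level activations were mapped onto the AlphaFold 3 structure. One simple assumption is to average each codon's three activations to get one value per amino acid, which could then, for example, be written into a structure file's B-factor column for coloring in a molecular viewer. A minimal sketch for a forward-strand coding sequence; the function name and coordinate convention are hypothetical.

```python
from typing import List


def per_residue_activation(nt_acts: List[float], cds_start: int, cds_end: int) -> List[float]:
    """Average nucleotide-level activations over each codon of a coding sequence.

    cds_start/cds_end: half-open forward-strand coordinates of the CDS.
    If the interval includes the stop codon, the last value belongs to it
    rather than to an amino acid.
    """
    length = cds_end - cds_start
    if length % 3 != 0:
        raise ValueError("CDS length must be a multiple of 3")
    return [
        sum(nt_acts[cds_start + 3 * r: cds_start + 3 * r + 3]) / 3
        for r in range(length // 3)
    ]
```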

Additional noteworthy examples

See Figure 4 of the preprint to learn more about the most salient features we discovered across the various scales of biological complexity.

Looking Ahead

This announcement provides a high-level overview of our work with Arc Institute. We're currently working to publish a more comprehensive study in the coming months that will detail our interpretability methodology.

We're excited about AI interpretability's potential to accelerate meaningful scientific discovery. If you're working on scientific foundation models, we'd love to explore how we can help interpret them.


Citation

L. Gorton, N. Wang, N. Nguyen, M. Deng, E. Ho, D. Balsam, and T. McGrath, "Interpreting Evo 2: Arc Institute's Next-Generation Genomic Foundation Model," Goodfire, Feb. 20, 2025. [Online]. Available: https://www.goodfire.ai/research/interpreting-evo-2

Footnotes

1. Features are interpretable patterns we extract from the activity of a neural network's neurons, revealing how the model processes information. They represent meaningful concepts that emerge from complex neural interactions, such as a model's understanding of α-helices.

2. Exon-intron boundaries are crucial junctions in genes where protein-coding sequences (exons) meet non-coding sequences (introns). These sites guide RNA splicing, and mutations at them can disrupt proper protein production and cause disease.

3. Protein secondary structure refers to local folding patterns (mainly α-helices and β-sheets) that form as proteins fold. These patterns are essential for determining the protein's final shape and function.

References

- [1] Balsam et al., 2024. Goodfire Ember: Scaling Interpretability for Frontier Model Alignment.

- [2] McGrath et al., 2024. Understanding and Steering Llama 3 with Sparse Autoencoders.

- [3] Simon, E. and Zou, J., 2024. InterPLM: Discovering Interpretable Features in Protein Language Models via Sparse Autoencoders.

- [4] Huben, R., Cunningham, H., Smith, L.R., Ewart, A. and Sharkey, L., 2024. Sparse Autoencoders Find Highly Interpretable Features in Language Models. The Twelfth International Conference on Learning Representations.

- [5] Bricken, T., Templeton, A., Batson, J., Chen, B., Jermyn, A., Conerly, T., Turner, N., Anil, C., Denison, C., Askell, A., Lasenby, R., Wu, Y., Kravec, S., Schiefer, N., Maxwell, T., Joseph, N., Hatfield-Dodds, Z., Tamkin, A., Nguyen, K., McLean, B., Burke, J.E., Hume, T., Carter, S., Henighan, T. and Olah, C., 2023. Towards Monosemanticity: Decomposing Language Models With Dictionary Learning. Transformer Circuits Thread.

- [6] Gao, L., Dupré la Tour, T., Tillman, H., Goh, G., Troll, R., Radford, A., Sutskever, I., Leike, J. and Wu, J., 2024. Scaling and evaluating sparse autoencoders.

- [7] Abramson, J., Adler, J., Dunger, J., et al., 2024. Accurate structure prediction of biomolecular interactions with AlphaFold 3. Nature, Vol. 630, pp. 493-500. DOI: 10.1038/s41586-024-07487-w